ACM Multimedia 2022 was recently held in Lisbon, Portugal. Li Rui, a PhD candidate co-supervised by Prof. Bao-Liang Lu and Associate Professor Wei-Long Zheng at the Key Laboratory of Shanghai Commission for Intelligent Interaction and Cognitive Engineering, Department of Computer Science and Engineering, won the ACM Multimedia Top Paper Award.

ACM Multimedia (CCF A) is the premier international conference in the multimedia field and a key venue for presenting scientific achievements and innovative industrial products. ACM Multimedia 2022 received 2473 valid submissions, with an acceptance rate of 27.9%. A total of 13 Top Papers were selected, including one Best Paper and one Best Student Paper.

Background

Affective brain-computer interfaces have advanced considerably: researchers can now successfully interpret labeled, undamaged EEG data collected in laboratory settings. However, annotating EEG data is time-consuming, and EEG data collected in daily life may be partially damaged because EEG signals are sensitive to noise. These challenges limit the application of EEG in practical scenarios.

Introduction

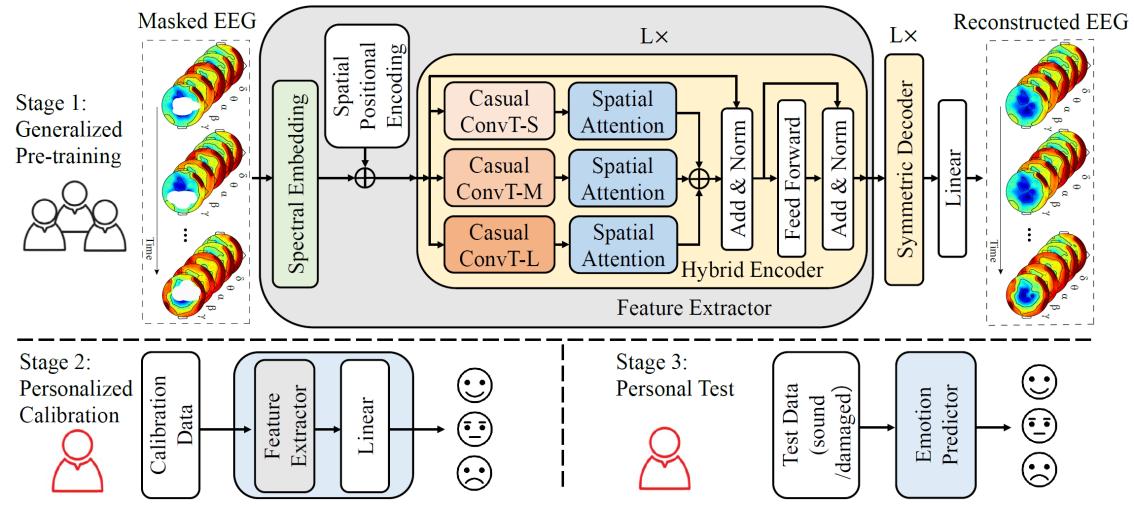

The paper is titled ‘A Multi-view Spectral-Spatial-Temporal Masked Autoencoder for Decoding Emotions with Self-supervised Learning’. In it, the authors propose a Multi-view Spectral-Spatial-Temporal Masked Autoencoder (MV-SSTMA) with self-supervised learning to tackle the practical challenges that EEG faces in daily applications. The MV-SSTMA is based on a multi-view CNN-Transformer hybrid structure that interprets the emotion-related knowledge in EEG signals from spectral, spatial, and temporal perspectives. The method consists of three stages: 1) in the generalized pre-training stage, channels of unlabeled EEG data from all subjects are randomly masked and then reconstructed, so that the model learns generic representations of EEG data; 2) in the personalized calibration stage, only a few labeled samples from a specific subject are used to calibrate the model; 3) in the personal test stage, the model decodes personal emotions from intact EEG data as well as from damaged data with missing channels. Extensive experiments on two open emotional EEG datasets demonstrate that the proposed model achieves state-of-the-art performance on emotion recognition. In addition, under the abnormal circumstance of missing channels, the proposed model can still recognize emotions effectively.
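The random channel masking used in the pre-training stage can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the array layout (channels × features), the zero-fill masking, the 50% mask ratio, the 62-channel example, scoring with mean squared error on masked channels only, and the helper names `mask_channels` and `masked_reconstruction_loss` are all assumptions made for illustration.

```python
import numpy as np


def mask_channels(eeg, mask_ratio=0.5, rng=None):
    """Zero out a random subset of EEG channels.

    eeg: array of shape (n_channels, n_features); this layout is an assumption.
    Returns the masked copy and a boolean vector marking the masked channels.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_channels = eeg.shape[0]
    n_masked = int(round(mask_ratio * n_channels))
    # Pick distinct channel indices to hide from the model.
    masked_idx = rng.choice(n_channels, size=n_masked, replace=False)
    is_masked = np.zeros(n_channels, dtype=bool)
    is_masked[masked_idx] = True
    masked = eeg.copy()
    masked[is_masked] = 0.0  # zero-fill is an illustrative choice
    return masked, is_masked


def masked_reconstruction_loss(original, reconstructed, is_masked):
    """Mean squared error computed only over the masked channels."""
    if not is_masked.any():
        return 0.0
    diff = original[is_masked] - reconstructed[is_masked]
    return float(np.mean(diff ** 2))


rng = np.random.default_rng(0)
eeg = rng.standard_normal((62, 5))  # hypothetical: 62 channels x 5 features
masked, is_masked = mask_channels(eeg, mask_ratio=0.5, rng=rng)
```

In an actual pipeline the reconstruction would come from an encoder-decoder network trained to minimize such a loss; here a perfect reconstruction (`eeg` itself) gives a loss of zero. The same masking function could also be used at test time to simulate damaged recordings with missing channels.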

The overall process of the proposed method.

Paper link: https://dl.acm.org/doi/10.1145/3503161.3548243